WeChat official account: OpenCV学堂

Model export and input/output

from ultralytics import YOLOv10

"""Test exporting the YOLO model to ONNX format."""

f = YOLOv10("yolov10n.pt").export(format="onnx", opset=11, dynamic=False)

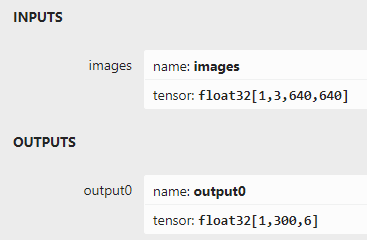

The supported input format is 1x3x640x640, and the output format is 1x300x6.

Each of the 300 output rows holds six values:

x1 y1 x2 y2 score classid

C++ inference
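To make the 1x300x6 layout concrete, here is a minimal sketch in plain C++ that walks a flat output buffer row by row and keeps the rows whose score passes a threshold. The `Detection` struct and the `decodeDetections` helper are hypothetical names introduced for illustration; they are not part of YOLOv10, OpenVINO, or TensorRT. The sketch assumes the buffer is row-major with six floats per row, as described above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One decoded row of the 1x300x6 output: x1 y1 x2 y2 score classid.
// (Hypothetical struct, introduced for this sketch only.)
struct Detection {
    float x1, y1, x2, y2;  // box corners in network-input pixels
    float score;           // confidence score
    int class_id;          // class index
};

// Hypothetical helper: walks a flat row-major buffer of N x 6 floats
// and keeps the rows whose score exceeds score_threshold.
std::vector<Detection> decodeDetections(const std::vector<float>& output,
                                        float score_threshold) {
    constexpr std::size_t kFields = 6;
    assert(output.size() % kFields == 0);  // buffer must hold whole rows
    std::vector<Detection> result;
    for (std::size_t i = 0; i + kFields <= output.size(); i += kFields) {
        const float* row = &output[i];
        if (row[4] > score_threshold) {
            result.push_back({row[0], row[1], row[2], row[3], row[4],
                              static_cast<int>(row[5])});
        }
    }
    return result;
}
```

Calling `decodeDetections(prob, 0.25f)` on the copied-back output would apply the same 0.25 score threshold used in the inference code in this article. The exported model already emits final boxes, so the sketch applies only a score filter.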

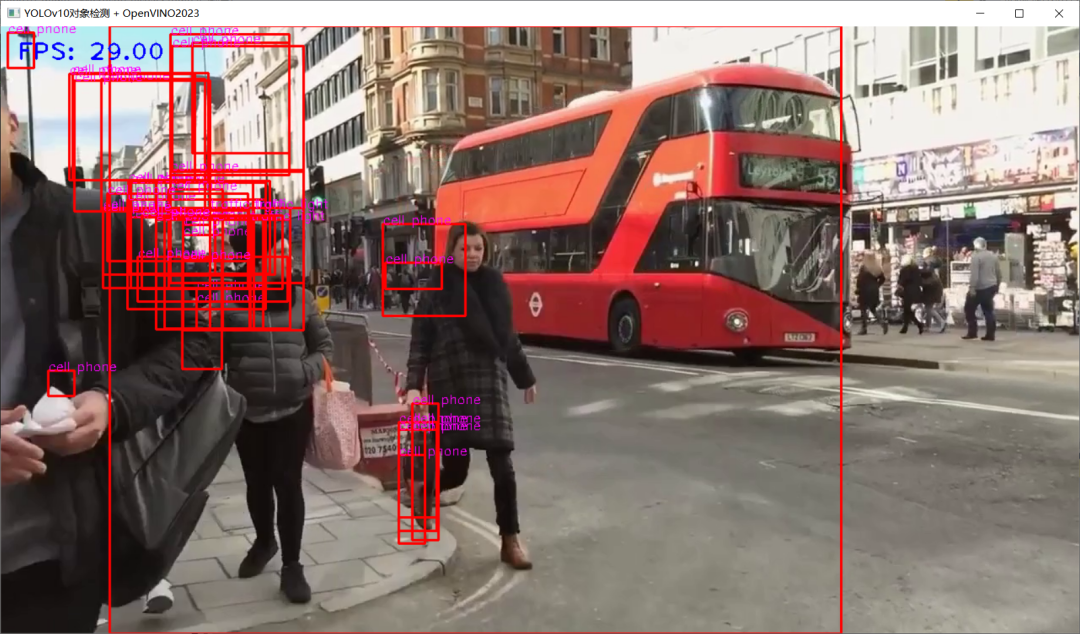

ov::CompiledModel compiled_model = ie.compile_model("D:/python/yolov10-1.0/yolov10n.onnx", "AUTO");

auto infer_request = compiled_model.create_infer_request();

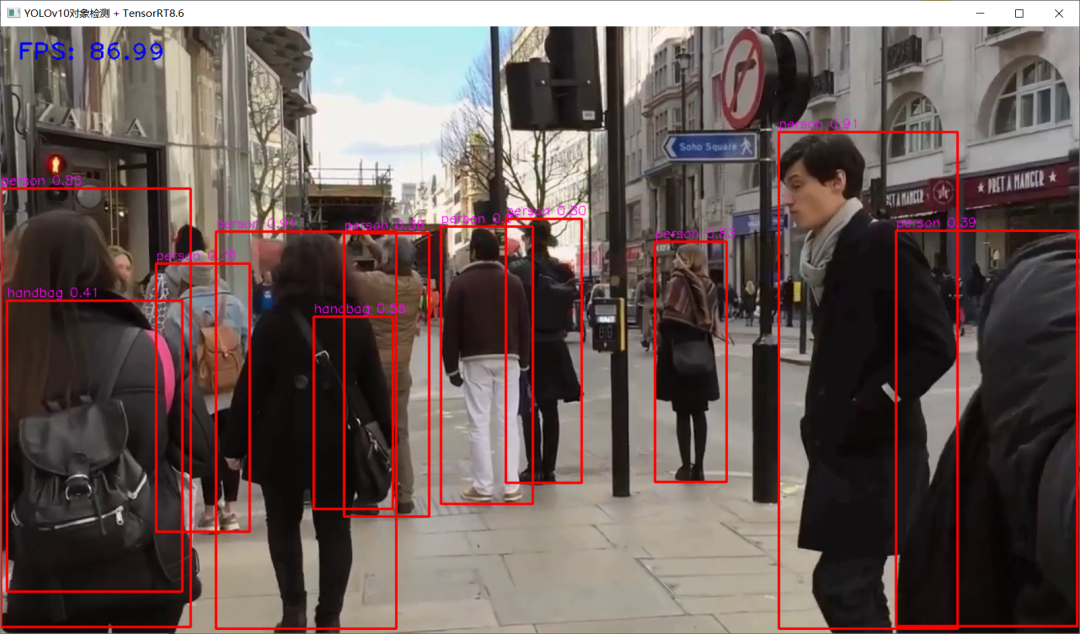

trtexec.exe --onnx=yolov10n.onnx --saveEngine=yolov10n.engine

TensorRT 8.6 C++ inference demo, run on my laptop with a 3050 Ti GPU.
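Before the engine file produced by trtexec can be used, its bytes have to be loaded into memory. The sketch below shows only that step, using the C++ standard library. The helper name `readBinaryFile` is hypothetical. In a TensorRT program the resulting buffer would typically be handed to `nvinfer1::IRuntime::deserializeCudaEngine`, but that call is left out so the example stays self-contained.

```cpp
#include <cstddef>
#include <fstream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: reads a whole binary file (for example a serialized
// engine such as yolov10n.engine) into a byte buffer.
std::vector<char> readBinaryFile(const std::string& path) {
    // Open at the end so tellg() reports the file size.
    std::ifstream file(path, std::ios::binary | std::ios::ate);
    if (!file) {
        throw std::runtime_error("cannot open file: " + path);
    }
    const std::streamsize size = file.tellg();
    file.seekg(0, std::ios::beg);
    std::vector<char> data(static_cast<std::size_t>(size));
    if (size > 0 && !file.read(data.data(), size)) {
        throw std::runtime_error("cannot read file: " + path);
    }
    return data;
}
```

Throwing on failure is one possible design choice here. A missing or unreadable engine file is usually not recoverable, so failing early keeps the error close to its cause.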

The relevant C++ inference code is as follows:

int64 start = cv::getTickCount();

// Image preprocessing: pad the frame onto a square canvas

int w = frame.cols;

int h = frame.rows;

int _max = std::max(h, w);

cv::Mat image = cv::Mat::zeros(cv::Size(_max, _max), CV_8UC3);

cv::Rect roi(0, 0, w, h);

frame.copyTo(image(roi));

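// Scale factors for mapping boxes from the network input back to the padded image

float x_factor = image.cols / static_cast<float>(input_w);

float y_factor = image.rows / static_cast<float>(input_h);

// HWC => CHW, scale pixel values to [0, 1], swap BGR to RGB

cv::Mat tensor = cv::dnn::blobFromImage(image, 1.0f / 255.f, cv::Size(input_w, input_h), cv::Scalar(), true);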

// Copy from host memory to GPU memory

cudaMemcpyAsync(buffers[0], tensor.ptr<float>(), input_h * input_w * 3 * sizeof(float), cudaMemcpyHostToDevice, stream);

// Inference

context->enqueueV2(buffers, stream, nullptr);

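// Copy from GPU memory back to host memory

cudaMemcpyAsync(prob.data(), buffers[1], output_h * output_w * sizeof(float), cudaMemcpyDeviceToHost, stream);

// Make sure the asynchronous work on this stream has finished before reading prob on the host

cudaStreamSynchronize(stream);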

// Post-processing

cv::Mat det_output(output_h, output_w, CV_32F, (float*)prob.data());

for (int i = 0; i < det_output.rows; i++) {

float tl_x = det_output.at<float>(i, 0) * x_factor;

float tl_y = det_output.at<float>(i, 1) * y_factor;

float br_x = det_output.at<float>(i, 2) * x_factor;

float br_y = det_output.at<float>(i, 3) * y_factor;

float score = det_output.at<float>(i, 4);

int class_id = static_cast<int>(det_output.at<float>(i, 5));

if (score > 0.25) {

cv::Rect box((int)tl_x, (int)tl_y, (int)(br_x - tl_x), (int)(br_y - tl_y));

rectangle(frame, box, cv::Scalar(0, 0, 255), 2, 8, 0);

putText(frame, cv::format("%s %.2f",classNames[class_id], score), cv::Point(box.tl().x, box.tl().y-5), fontface, fontScale, cv::Scalar(255, 0, 255), thickness, 8);

}

}

float t = (cv::getTickCount() - start) / static_cast<float>(cv::getTickFrequency());

putText(frame, cv::format("FPS: %.2f", 1.0 / t), cv::Point(20, 40), cv::FONT_HERSHEY_PLAIN, 2.0, cv::Scalar(255, 0, 0), 2, 8);

cv::imshow("YOLOv10 object detection + TensorRT8.6", frame);
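The coordinate handling in the code above can be checked in isolation. The frame is copied to the top-left corner of a square canvas whose side is the longer frame dimension, and the canvas is then resized to the network input size. Mapping a box back to frame pixels therefore needs only a multiplication, with no offset. The sketch below reproduces that arithmetic in plain C++. `Box`, `paddedSide`, and `toFrameCoords` are hypothetical names used for illustration only.

```cpp
#include <algorithm>

// Axis-aligned box given by its top-left and bottom-right corners.
// (Hypothetical struct, introduced for this sketch only.)
struct Box {
    float x1, y1, x2, y2;
};

// Side length of the square canvas: the longer side of the frame.
int paddedSide(int frame_w, int frame_h) {
    return std::max(frame_w, frame_h);
}

// Maps a box from network-input pixels back to frame pixels. Because the
// frame sits at the top-left corner of the canvas, no offset is subtracted.
Box toFrameCoords(const Box& b, int frame_w, int frame_h,
                  int input_w, int input_h) {
    const int side = paddedSide(frame_w, frame_h);
    const float x_factor = side / static_cast<float>(input_w);
    const float y_factor = side / static_cast<float>(input_h);
    return {b.x1 * x_factor, b.y1 * y_factor,
            b.x2 * x_factor, b.y2 * y_factor};
}
```

For a 1280x720 frame and a 640x640 input, the canvas side is 1280 and both factors are 2.0, so a box at (100, 50, 300, 200) in network coordinates maps to (200, 100, 600, 400) in the frame. Boxes predicted inside the padded area can extend past the real frame, so clamping to the frame size may be worth adding in practice.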
