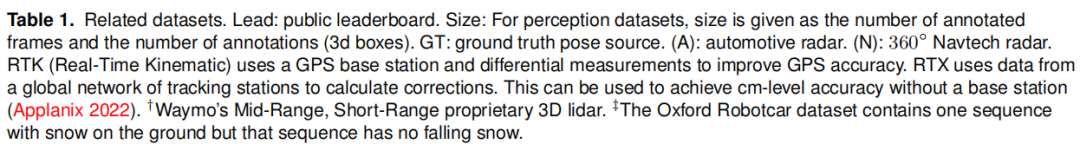

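In addition, our post-processed ground-truth poses are accurate enough to support a public leaderboard for odometry and metric localization. To the best of our knowledge, no other dataset currently offers a public leaderboard for metric localization. Table 1 gives a detailed comparison with related datasets.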

3 Data Collection

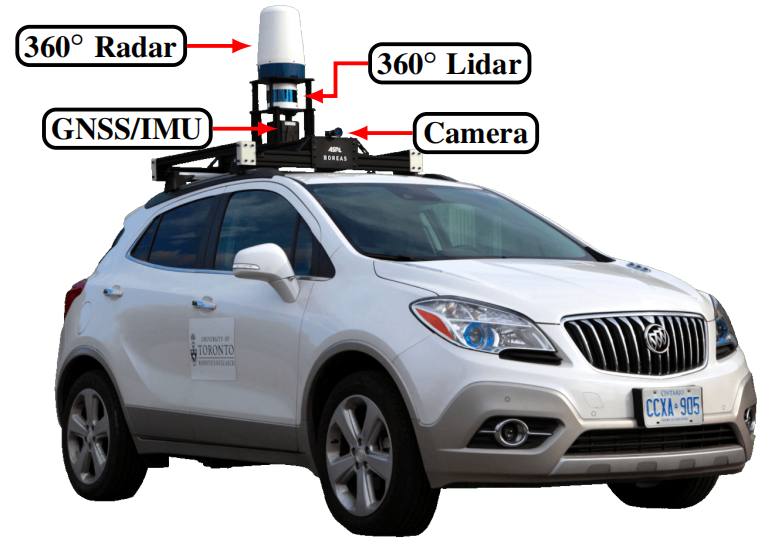

4 Sensors

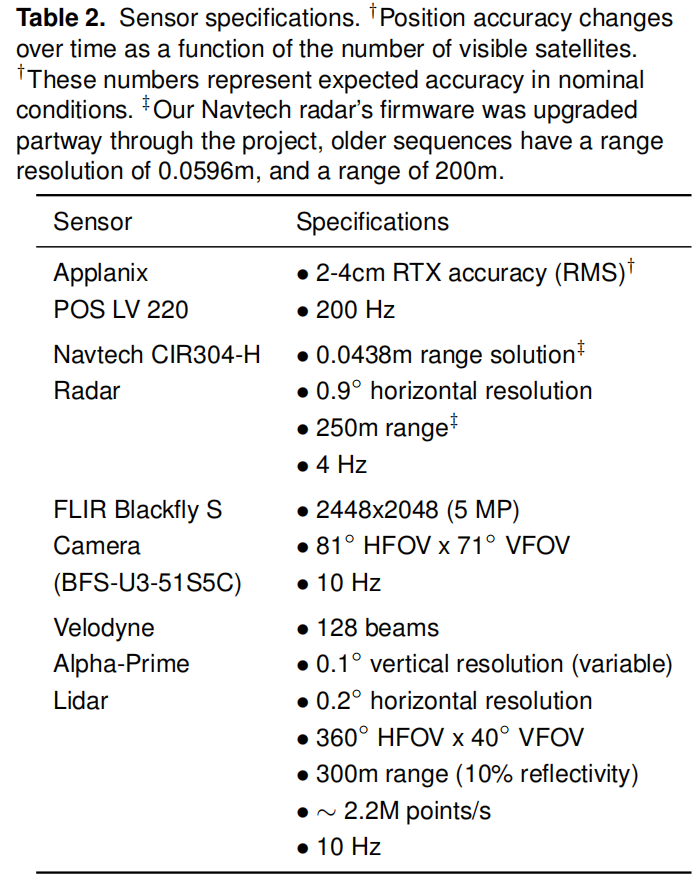

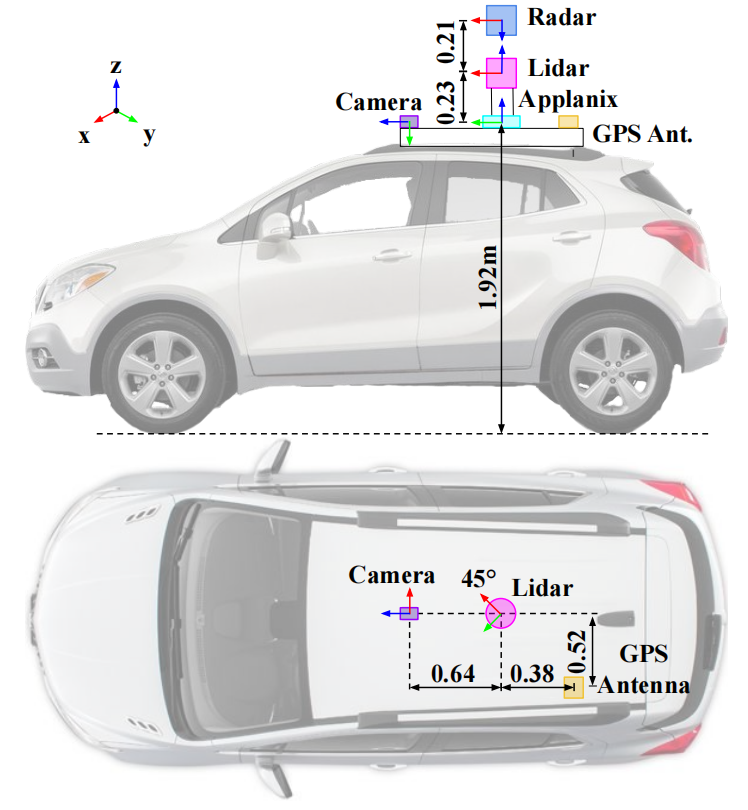

Table 2 gives detailed specifications of the sensors used in this dataset. Figure 6 shows where the different sensors are mounted on Boreas.

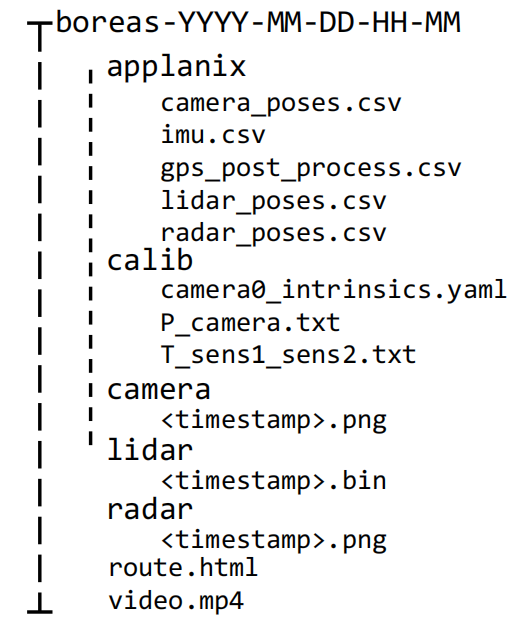

5 Dataset Format

Figure 7. Data organization of a single Boreas sequence.

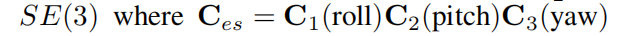

6 Ground Truth Poses

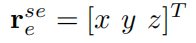

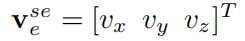

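For each sensor s, the ground truth provides: the position of sensor s with respect to ENU, expressed in ENU; the velocity of the sensor with respect to ENU; the roll, pitch and yaw angles (r, p, y), which can be converted into the rotation matrix between the sensor frame and ENU; and the angular velocity of the sensor with respect to the ENU frame, measured in the sensor frame. The pose of the sensor frame then follows from the position and rotation as a homogeneous transformation (Barfoot 2017).

We also provide post-processed IMU measurements at 200 Hz in Applanix/imu.csv, expressed in the Applanix frame and including linear acceleration and angular velocity.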
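The step from a position and (r, p, y) to a 4x4 pose can be sketched in plain Python. This is a sketch under stated assumptions, not the development kit's code: it assumes angles in radians, a Z-Y-X (yaw, then pitch, then roll) composition C = Rz(y)·Ry(p)·Rx(r), and that C rotates sensor-frame vectors into ENU. The dataset's actual Euler-angle convention should be checked against the development kit; the helper names (`rpy_to_rot`, `pose_matrix`) are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def rot_x(a: float) -> Matrix:
    """Rotation about the x-axis by angle a (radians)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a: float) -> Matrix:
    """Rotation about the y-axis by angle a (radians)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a: float) -> Matrix:
    """Rotation about the z-axis by angle a (radians)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain matrix product A @ B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def rpy_to_rot(roll: float, pitch: float, yaw: float) -> Matrix:
    # ASSUMPTION: Z-Y-X composition C = Rz(yaw) Ry(pitch) Rx(roll),
    # mapping sensor-frame vectors into ENU.
    return matmul(rot_z(yaw), matmul(rot_y(pitch), rot_x(roll)))


def pose_matrix(x: float, y: float, z: float,
                roll: float, pitch: float, yaw: float) -> Matrix:
    """4x4 homogeneous pose [C r; 0^T 1] from an ENU position and (r, p, y)."""
    C = rpy_to_rot(roll, pitch, yaw)
    return [C[0] + [x], C[1] + [y], C[2] + [z], [0.0, 0.0, 0.0, 1.0]]
```

For example, `pose_matrix(1.0, 2.0, 3.0, 0.0, 0.0, math.pi / 2)` describes a sensor at (1, 2, 3) m in ENU whose x-axis points north (the ENU y-axis) under this assumed convention.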

7 Calibration

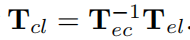

Next, each point in the lidar frame is transformed into the camera frame, $\mathbf{X}_c = \mathbf{T}_{cl}\mathbf{X}_l$, where $\mathbf{X}_l = [x\ y\ z\ 1]^T$. The projected image coordinates are then obtained using:

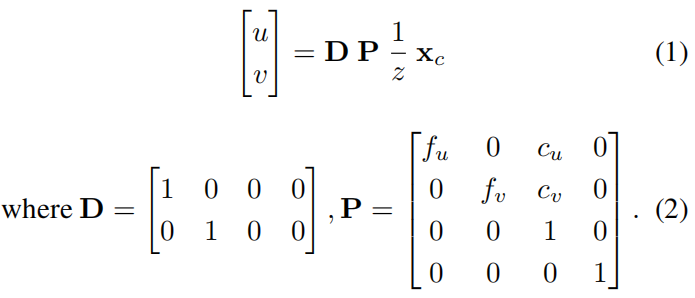
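A minimal sketch of the lidar-to-camera projection described above, assuming a standard pinhole model with a 3x3 intrinsic matrix `K` and a camera whose z-axis points forward. The authoritative projection equation is the one given in the paper; the names `T_cl`, `K`, `transform_point` and `project_point` are illustrative.

```python
from typing import List, Optional, Sequence, Tuple

Matrix = List[List[float]]


def transform_point(T_cl: Matrix, p_l: Sequence[float]) -> List[float]:
    """X_c = T_cl X_l with X_l = [x y z 1]^T; returns the 3D camera-frame point."""
    X_l = [p_l[0], p_l[1], p_l[2], 1.0]
    return [sum(T_cl[i][k] * X_l[k] for k in range(4)) for i in range(3)]


def project_point(T_cl: Matrix, K: Matrix,
                  p_l: Sequence[float]) -> Optional[Tuple[float, float]]:
    """Project a lidar point to pixel coordinates (u, v) with a pinhole model.

    ASSUMPTION: K is a 3x3 intrinsic matrix and the camera z-axis points forward.
    Returns None for points at or behind the image plane.
    """
    X_c = transform_point(T_cl, p_l)
    if X_c[2] <= 0.0:
        return None
    h = [sum(K[i][k] * X_c[k] for k in range(3)) for i in range(3)]
    # Perspective division by the third homogeneous coordinate.
    return (h[0] / h[2], h[1] / h[2])
```

As a usage example, a hypothetical `T_cl = [[0, -1, 0, 0], [0, 0, -1, 0], [1, 0, 0, 0], [0, 0, 0, 1]]` maps a lidar frame (x forward, y left, z up) into a camera frame (z forward, x right, y down); with `K = [[500, 0, 320], [0, 500, 240], [0, 0, 1]]`, a point 10 m straight ahead projects to the principal point (320, 240).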

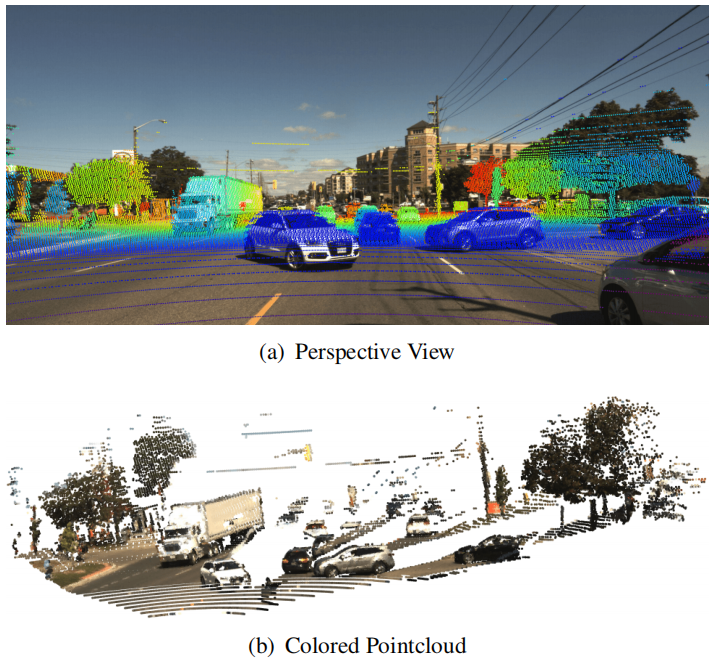

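Figure 10 shows the radar-to-lidar calibration results. The extrinsics between the lidar and the Applanix reference frame were obtained with Applanix's in-house calibration tool, which outputs this relative transform as a by-product of a batch optimization that estimates the most likely vehicle path given a sequence of lidar point clouds and post-processed GNSS/IMU measurements. All extrinsic calibrations are provided as 4x4 homogeneous transformation matrices in the calib/ folder.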
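Because every extrinsic is a 4x4 homogeneous transformation, calibrations can be chained and inverted directly, for example to obtain a radar-to-Applanix transform from the radar-to-lidar and lidar-to-Applanix results. The sketch below assumes the convention that `T_ab` maps homogeneous points from frame b into frame a (as in $\mathbf{X}_c = \mathbf{T}_{cl}\mathbf{X}_l$) and that a calibration file holds four whitespace-separated rows of four numbers; the actual file names and layout in calib/ should be checked against the dataset, and the names `T_al`, `T_lr` are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def parse_matrix(text: str) -> Matrix:
    """Parse a 4x4 matrix (ASSUMED layout: 4 rows of 4 whitespace-separated numbers)."""
    rows = [[float(v) for v in line.split()] for line in text.strip().splitlines() if line.strip()]
    if len(rows) != 4 or any(len(r) != 4 for r in rows):
        raise ValueError("expected 4 rows of 4 numbers")
    return rows


def compose(A: Matrix, B: Matrix) -> Matrix:
    """Chain transforms: T_ac = T_ab @ T_bc."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_se3(T: Matrix) -> Matrix:
    """Inverse of a rigid transform: [C r; 0 1]^-1 = [C^T  -C^T r; 0 1]."""
    C_T = [[T[j][i] for j in range(3)] for i in range(3)]
    r = [T[i][3] for i in range(3)]
    t = [-sum(C_T[i][k] * r[k] for k in range(3)) for i in range(3)]
    return [C_T[0] + [t[0]], C_T[1] + [t[1]], C_T[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]
```

Using the closed-form rigid inverse instead of a general matrix inverse keeps the result exactly in SE(3), provided the input's bottom row is [0 0 0 1] and its rotation block is orthonormal.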

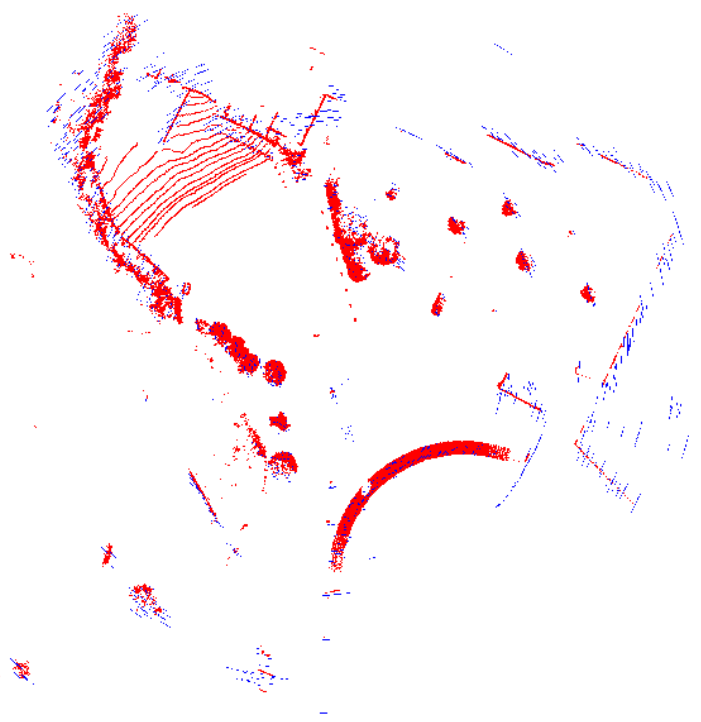

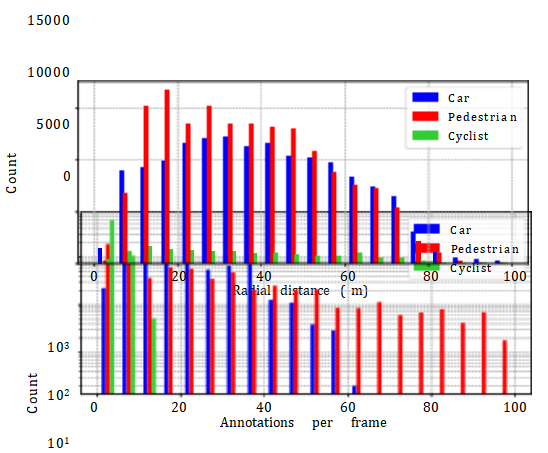

8 3D Annotations

Figure 12. Examples of 3D annotations in the Boreas-Objects-V1 dataset.

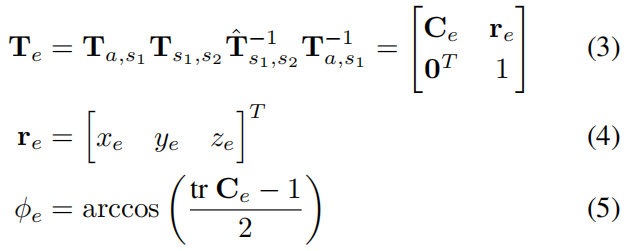

9 Benchmarks

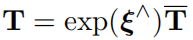

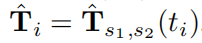

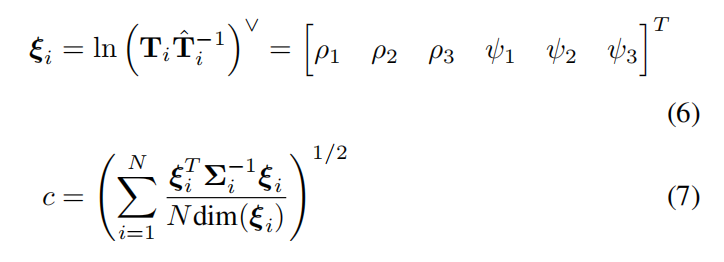

where $\boldsymbol{\xi} \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma})$ (Barfoot 2017). We then compute the average consistency score $c$ of the localization and covariance estimates:
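The consistency score can be sketched under an explicit assumption: here $c$ is taken to be the square root of the average normalized estimation error squared, $c = \sqrt{\frac{1}{DK}\sum_{k=1}^{K} \mathbf{e}_k^T \boldsymbol{\Sigma}_k^{-1} \mathbf{e}_k}$, over $K$ localization estimates with $D$-dimensional errors $\mathbf{e}_k$ and estimated covariances $\boldsymbol{\Sigma}_k$. This NEES-style form is an illustration only; the benchmark's authoritative definition is the paper's equation and the evaluation scripts in the development kit.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def solve(A: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [[float(v) for v in row] + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-15:
            raise ValueError("singular covariance")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for j in range(col, n + 1):
                M[r][j] -= f * M[col][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def consistency_score(errors: Sequence[Sequence[float]],
                      covariances: Sequence[Matrix]) -> float:
    """ASSUMED form: c = sqrt( (1 / (D K)) * sum_k e_k^T Sigma_k^{-1} e_k )."""
    if not errors:
        raise ValueError("need at least one estimate")
    K, D = len(errors), len(errors[0])
    total = 0.0
    for e, S in zip(errors, covariances):
        x = solve(S, e)  # x = Sigma_k^{-1} e_k without forming the inverse
        total += sum(ei * xi for ei, xi in zip(e, x))
    return math.sqrt(total / (D * K))
```

Under this form, $c \approx 1$ means the reported covariances match the actual errors, $c > 1$ indicates over-confident covariances, and $c < 1$ indicates conservative ones.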

10 Development Kit

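Finally, we provide several introductory tutorials as Jupyter notebooks, covering topics such as projecting lidar points onto camera images and visualizing 3D boxes. The evaluation scripts used by our benchmarks will be included in the development kit, so that users can validate their algorithms before submitting to the benchmark. The development kit is available at boreas.utias.utoronto.ca.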

11 Conclusion

References:

Applanix (2022) www.applanix.com.

Barfoot TD (2017) State estimation for robotics. Cambridge University Press.

Barnes D, Gadd M, Murcutt P, Newman P and Posner I (2020) The Oxford Radar RobotCar dataset: A radar extension to the Oxford RobotCar dataset. In: IEEE International Conference on Robotics and Automation (ICRA). pp. 6433–6438.

Burnett K (2020) Radar to Lidar Calibrator.

Burnett K, Schoellig AP and Barfoot TD (2021a) Do we need to compensate for motion distortion and doppler effects in spinning radar navigation? IEEE Robotics and Automation Letters 6(2): 771–778.

Burnett K, Yoon DJ, Schoellig AP and Barfoot TD (2021b) Radar odometry combining probabilistic estimation and unsupervised feature learning. In: Robotics: Science and Systems (RSS).

Caesar H, Bankiti V, Lang AH, Vora S, Liong VE, Xu Q, Krishnan A, Pan Y, Baldan G and Beijbom O (2020) nuScenes: A multimodal dataset for autonomous driving. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 11621–11631.

Chang MF, Lambert J, Sangkloy P, Singh J, Bak S, Hartnett A, Wang D, Carr P, Lucey S, Ramanan D et al. (2019) Argoverse: 3D tracking and forecasting with rich maps. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 8748–8757.

Checchin P, Gérossier F, Blanc C, Chapuis R and Trassoudaine L (2010) Radar scan matching SLAM using the Fourier-Mellin transform. In: Field and Service Robotics. Springer, pp. 151–161.

Geiger A, Lenz P, Stiller C and Urtasun R (2013) Vision meets robotics: The KITTI dataset. The International Journal of Robotics Research 32(11): 1231–1237.

Huang X, Cheng X, Geng Q, Cao B, Zhou D, Wang P, Lin Y and Yang R (2018) The ApolloScape dataset for autonomous driving. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops. pp. 954–960.

Jeong J, Cho Y, Shin YS, Roh H and Kim A (2019) Complex urban dataset with multi-level sensors from highly diverse urban environments. The International Journal of Robotics Research 38(6): 642–657.

Kim G, Park YS, Cho Y, Jeong J and Kim A (2020) MulRan: Multimodal range dataset for urban place recognition. In: IEEE International Conference on Robotics and Automation (ICRA). pp. 6246–6253.

Maddern W, Pascoe G, Linegar C and Newman P (2017) 1 Year, 1000 km: The Oxford RobotCar dataset. The International Journal of Robotics Research 36(1): 3–15.

Mathworks (2022a) Using the Single Camera Calibrator App.

Mathworks (2022b) Lidar and Camera Calibration.

Pitropov M, Garcia DE, Rebello J, Smart M, Wang C, Czarnecki K and Waslander S (2021) Canadian adverse driving conditions dataset. The International Journal of Robotics Research 40(4–5): 681–690.

Scale (2022) www.scale.com.

Sheeny M, De Pellegrin E, Mukherjee S, Ahrabian A, Wang S and Wallace A (2021) RADIATE: A radar dataset for automotive perception in bad weather. In: IEEE International Conference on Robotics and Automation (ICRA). pp. 1–7.

Sun P, Kretzschmar H, Dotiwalla X, Chouard A, Patnaik V, Tsui P, Guo J, Zhou Y, Chai Y, Caine B et al. (2020) Scalability in perception for autonomous driving: Waymo open dataset. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 2446–2454.

Understand (2022) Understand.ai Anonymizer.
